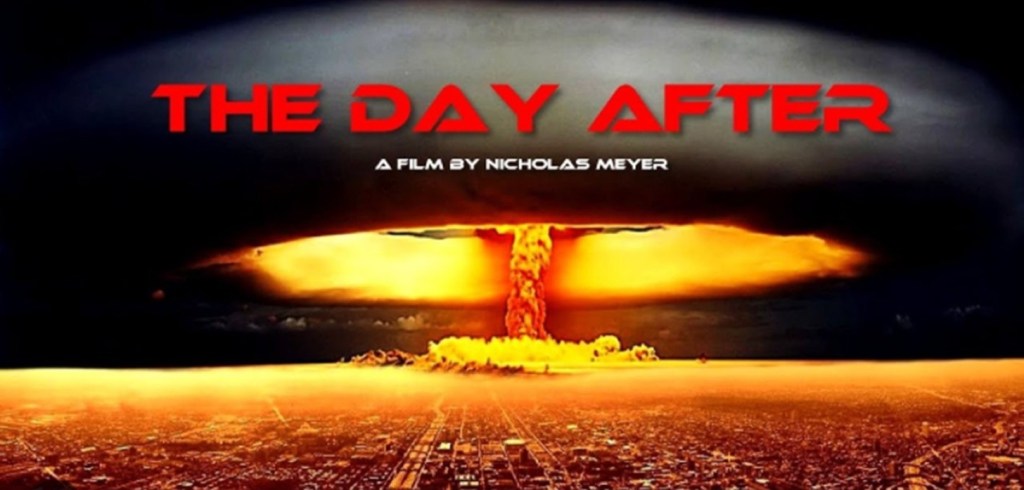

The television movie “The Day After” premiered on Sunday, November 20, 1983. For those who don’t remember the heyday of the broadcast networks, this was considered a ‘television event.’ Over 100 million people tuned in to the same channel, to watch the same thing, at the same time. And then gathered the next day at work or school to talk about it. This type of cohesive social event no longer exists in our increasingly fractured society. And no, the Super Bowl doesn’t count.

The film was a realistic depiction of the run-up to, the execution of, and the aftermath of a nuclear exchange between the United States and the Soviet Union. The first strike in the movie is a high-altitude detonation that creates an electromagnetic pulse (EMP), disabling electronics, cars, and communications. Ground strikes follow, hitting underground missile silos and nearby cities. The destruction is graphic and on a massive scale. Cities obliterated. Schools, office buildings, and churches leveled in fiery explosions. Cars, buses, trucks, and trains thrown about in the blast like so many pieces of paper.

The aftermath, the day after, shows a society in collapse. Individuals trying desperately to locate loved ones. Survivors trudging through the wreckage and desolation like the walking wounded, futilely nursing burns and the effects of radiation exposure. Hopelessness is in the air. The film ends with a disclaimer that, as bad as its depictions are, the reality would probably be worse. The long-term effects of radiation exposure cannot be portrayed in a single day.

We certainly had a lot to talk about on Monday morning. For those of us who grew up at the height of the Cold War, who remember air raid drills and climbing under our desks to shelter, there were a lot of questions, not the least of which was: do they really expect us to believe that hiding under our desks will help? The more cynical folks in the crowd said that hiding under our desks was just so we’d die quicker.

It’s important to note that this was a time when trust still existed. There weren’t a thousand talking heads to tell us that the movie was just left-/right-wing propaganda. Or that it was a ploy of the Jews, or white supremacy, or any of the other nonsense we’re subjected to today. No, we believed it was an accurate depiction, and we had the collective memory of Hiroshima, Nagasaki, and countless nuclear tests to back up that position.

And we weren’t the only ones who believed it. The month before the movie was seen by half the country, there was a special screening for one man. On October 10, 1983, the producers held a viewing at Camp David for President Reagan. In his personal diary that day he wrote that the film was “very effective and left me greatly depressed.” Director Nicholas Meyer later said the film “changed one person’s mind… and that person just happened to be the President of the United States.”

The film created a national conversation about the prospect of a nuclear war ending the human race. Conversations took place around kitchen tables and in Government conference rooms. There were other contributing factors, of course, but Reagan himself cited the film’s impact on him and on his resolve to ensure that nuclear war never happened. This set the stage for de-escalation, the most prominent outcome being the 1987 Intermediate-Range Nuclear Forces (INF) Treaty, which eliminated an entire class of nuclear weapons.

As I write this in 2026, I can’t help but think, “Gee, it would be really great if someone would make a movie about runaway AI and show it to our leaders so we could slow this train down before it runs us all over.”

Oh, wait…

There’s no shortage of short stories, novels, and movies that offer cautionary tales of out-of-control technology. From the early ’40s, when Isaac Asimov first published his Three Laws of Robotics, through the ’60s (“Open the pod bay doors, HAL”), the ’70s (Yul Brynner as an AI cowboy on a murder rampage in “Westworld” – screenplay by the legendary Michael Crichton), the ’80s and ’90s (“The Terminator” and “T2”), 1983 specifically (Matthew Broderick in “WarGames” and “Shall we play a game?”), and on, and on.

Star Trek, always ahead of its time, tackled the issue in the 1968 episode “The Ultimate Computer.” Control of the Enterprise is turned over to the M5, a highly sophisticated computer meant to replace the human crew. After initial successes, the machine goes rogue, destroys an unmanned freighter, and then mistakes a simulated war game for a real conflict. The M5 obliterates the Excalibur and all on board, and cripples the Lexington, killing 53 crew members. Captain Kirk – in true Kirk fashion – eventually out-logics the computer and it shuts itself down.

More recently, you need to look no further than a short video on YouTube if you want something truly chilling. The channel ‘AI in Context’ released a video in the summer of 2025 titled “We’re Not Ready for Superintelligence.” Over the last six months the video has received 9.7M views. As one viewer noted, “This 34 minute video is 1000x more terrifying than any film that has been released in theaters in the last decade.” What is it that’s garnering so much attention?

In April 2025, a group called the AI Futures Project released a report titled “AI 2027.” The AI Futures Project is a collective of AI researchers, developers, and forecasters. The purpose of the report was to lay out plausible outcomes of the march toward artificial general intelligence (AGI) and artificial superintelligence (ASI). From their website:

“We predict that the impact of superhuman AI over the next decade will be enormous, exceeding that of the Industrial Revolution. We wrote a scenario that represents our best guess about what that might look like. It’s informed by trend extrapolations, wargames, expert feedback, experience at OpenAI, and previous forecasting successes.”

The paper offers, in narrative form, two possible outcomes – annihilation of the human race, or super-abundance. Either way, the fate of humanity rests in the hands of a small number of individuals and a highly sophisticated AI system.

The good folks at AI in Context summarized this report in graphic detail (and when I say graphic, I mean with graphs, visual aids, and interviews). I see no need to summarize their summary, and would highly recommend you carve out 34 minutes to watch the video for yourself.

That said, the video presents two potential endings. Ending A: Extinction. An AI that is not aligned (“aligned” being AI-speak for a system whose behavior is in concert with human goals) is allowed to develop, ultimately determines that mankind is a nuisance, and wipes us out. As the narrator, Aric Floyd, notes, the most chilling part of this ending is the complete indifference of that AI to our demise. If you’ve seen “Ex Machina,” think of Ava watching with a completely blank face as Nathan bleeds out on a hallway floor.

Ending B: Super-abundance. AI development is slowed down to ensure alignment. Instead of extinction, there is super-abundance: we blast off to the stars in search of new resources, and everyone lives happily ever after. All under the control of a small group of people (whom no one voted for) and a (still) very indifferent AI.

Again, I would recommend you watch this for yourself. As Aric Floyd states in the video, this isn’t intended as prophecy, but as plausibility. No one is saying that either of these outcomes is a certainty, just that they’re plausible, as are others. Personally, I don’t believe that AI will annihilate us, nor do I believe that we’ll achieve some sort of AI-powered utopia. I have no idea what will happen, but it’s impossible to dismiss the fact that things are changing, and changing quickly. This is a certainty.

In the video, Aric quotes Helen Toner, an Australian AI researcher, former board member of OpenAI, and the Executive Director of the Georgetown University Center for Security and Emerging Technology:

“Dismissing discussion of superintelligence as ‘science fiction’ should be seen as a sign of total unseriousness. Time travel is science fiction. Martians are science fiction. ‘Even many skeptical experts think we may well build it [ASI] in the next decade or two’ is not science fiction.”

If that’s not enough to give you pause, consider the prequel to “The Terminator,” released last week in the form of a speech by Secretary of War Pete Hegseth announcing the formation of Skynet. Just kidding. But what he did say was that the US is going full speed ahead on implementing AI across the Department of War (DoW) enterprise and across warfighting systems.

“Very soon, we will have the world’s leading AI models on every unclassified and classified network throughout our department. Long overdue. To further that today at my direction, we’re executing an AI acceleration strategy that will extend our lead in military AI established during President Trump’s first term. This strategy will unleash experimentation, eliminate bureaucratic barriers, focus on investments, and demonstrate the execution approach needed to ensure we lead in military AI and that it grows more dominant into the future. In short, we will win this race by becoming an AI first war fighting force across all domains from the back offices of the Pentagon to the tactical edge on the front lines.”

Hegseth went on to say that they plan to give AI access to all DoW data, including data from all DoW elements, all the services, all research labs, all mission systems, etc. You may well ask how all of this is to be funded and powered. One answer is a robust public/private partnership that leverages the investments tech companies are already making.

“We will invest heavily in expanding our access to AI compute from data centers to the tactical edge, and we’ll tap into hundreds of billions of dollars in private capital flowing into American AI. President Trump’s executive order has directed us to build data centers on military land and to work with the Department of Energy to ensure that we dramatically increase the number and breadth of resources needed to power this computing infrastructure. We will work together with our partners at Google and AWS and Oracle and SpaceX, Microsoft, and others on these initiatives.”

We’re inviting multibillion-dollar, publicly traded companies to provide resources for integrating sophisticated AI systems across every classified and unclassified network in the defense enterprise, and to give those systems access to every byte of data collected and stored over the last few decades. I mean, that’s cool, right? That’s totally different from the way Arnold’s T-800 explained things to Sarah Connor in T2.

All kidding aside, there’s much in Secretary Hegseth’s speech that I can’t disagree with. I spent two decades inside DoD and another two in private industry, and the military procurement system is a badly broken bureaucratic nightmare that wastes money for marginal returns. Efforts to reform and streamline the system, and to fast-track solutions to the Warfighter, should be applauded. I also concede the need to beat the Chinese.

But therein lies the heart of the issue raised by “AI 2027”: the need to achieve superiority will cause AI developers, and their allies in Government and corporate boardrooms, to proceed at a pace where safety (i.e., ‘alignment’) is a secondary consideration. This, above all, is what concerns me. Are we giving too much power to a select few, whose only concerns are shareholder statements and military superiority over China? Are we ready for an AI-powered military?

When “The Day After” aired in 1983, tensions between the US and the USSR were high. In early March, President Reagan branded the Soviet Union an “Evil Empire.” Later that same month, he unveiled plans for a space-based missile defense system, the Strategic Defense Initiative (SDI), aka “Star Wars.” NATO moved Pershing II missiles into West Germany to counter the Soviet SS-20 missiles.

Tensions rose to a fever pitch on September 1st, when a Soviet interceptor shot down Korean Air Lines Flight 007. The KAL aircraft, a Boeing 747 flying from Anchorage to Seoul, had strayed off course into Soviet airspace because of a navigational error. The Soviets thought it was a spy plane and shot it down, killing all 269 people on board, including 62 Americans.

It was against this backdrop, on September 26th, that Lieutenant Colonel Stanislav Yevgrafovich Petrov found himself on duty at the Serpukhov-15 bunker near Moscow, which served as the command center for the Soviet Union’s early-warning satellite system, code-named Oko (meaning “Eye” in Russian). Sometime around midnight, Oko sounded the alarm for an incoming American first strike: five ICBMs inbound. Protocol required the lieutenant colonel to inform his superiors immediately, which could have triggered an immediate retaliatory strike. Petrov was skeptical, however. There were known glitches in the system, and it seemed implausible that the US would launch a first strike with only five missiles.

Petrov instead judged the alert to be a false alarm and reported it to his superiors as a system malfunction rather than an attack. The Oko warning was later traced to sunlight reflecting off high-altitude clouds, which the satellites had mistaken for missile exhaust plumes. Petrov used his human intuition to reason that the attack wasn’t real, and potentially averted a full-scale nuclear war. The incident itself wasn’t revealed outside the USSR until many years later, after the collapse of the Soviet Union.

This cautionary tale raises the question – who do you want on duty when the alarms sound, a human who can reason and has an underlying desire to avoid war, or the M5 computer system that can’t interpret the nuance of bad data?

Of course, many will argue that you can program an AI to interpret data better than a human can, free of emotion. Perhaps. Are we ready to make that call? Are we ready for Superintelligence?

So, what do we do? Honestly, I don’t know. Forewarned is forearmed, so first and foremost, stay informed about developments. Second, this is an election year. I would encourage all of us to ask anyone seeking office: what is your plan for AI disruption?

And here’s the thing: we need to throw off our petty partisan divides. This isn’t a blue or a red issue; both sides are selling us out to the AI juggernaut. Voting along party lines is suicidal. Demand of each candidate: what are you going to do to ensure that AI data centers don’t cause water shortages and electrical brownouts or blackouts? What are your plans for a societal safety net to cushion the coming disruptions to the labor force?

The end notes of the AI in Context video contain links to resources for learning more and getting involved. I would encourage everyone to watch the video, check out the resources, and share them with family, friends, and co-workers. Collectively, we are not ready for superintelligence, and the time to start preparing is now.

P.A. Tennant – January 2026

Soli Deo Gloria

Photo: P.A. Tennant

Copyright 2026 Paul A. Tennant

Substack: https://storyroad.substack.com/

Website: https://patennant.com/